RDMA and RDMA over Converged Ethernet (RoCE)

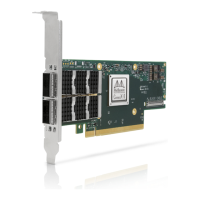

ConnectX-6, utilizing IBTA RDMA (Remote Direct Memory Access) and RoCE (RDMA over Converged Ethernet) technology, delivers low latency and high performance over InfiniBand and Ethernet networks. Leveraging datacenter bridging (DCB) capabilities as well as ConnectX-6 advanced congestion control hardware mechanisms, RoCE provides efficient low-latency RDMA services over Layer 2 and Layer 3 networks.
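As a quick way to see which transport each adapter port is using, the following minimal sketch walks the Linux RDMA sysfs tree and reports each port's link layer. It assumes a Linux host with the RDMA stack loaded; the function name `list_rdma_ports` is hypothetical and not part of any NVIDIA tool. A port whose link layer is `Ethernet` carries RDMA as RoCE, while `InfiniBand` indicates native InfiniBand.

```python
from pathlib import Path


def list_rdma_ports(sysfs_root="/sys/class/infiniband"):
    """Return (device, port, link_layer) tuples for each RDMA port found.

    Hypothetical helper for illustration; it only reads sysfs files.
    """
    root = Path(sysfs_root)
    results = []
    if not root.is_dir():
        return results  # RDMA stack not loaded, or not a Linux host
    for dev in sorted(root.iterdir()):
        ports_dir = dev / "ports"
        if not ports_dir.is_dir():
            continue
        for port in sorted(ports_dir.iterdir()):
            link_file = port / "link_layer"
            if link_file.is_file():
                layer = link_file.read_text().strip()
                results.append((dev.name, port.name, layer))
    return results


if __name__ == "__main__":
    for dev, port, layer in list_rdma_ports():
        # An Ethernet link layer means RDMA traffic on this port is RoCE.
        transport = "RoCE" if layer == "Ethernet" else layer
        print(f"{dev} port {port}: {transport}")
```

On a host without RDMA devices the function returns an empty list rather than raising, so it is safe to run as a probe.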

NVIDIA PeerDirect™

PeerDirect™ communication provides high-efficiency RDMA access by eliminating unnecessary internal data copies between components on the PCIe bus (for example, from GPU to CPU), and therefore significantly reduces application run time. ConnectX-6 advanced acceleration technology enables higher cluster efficiency and scalability to tens of thousands of nodes.

CPU Offload

Adapter functionality reduces CPU overhead, leaving more CPU resources available for computation tasks.

Open vSwitch (OVS) offload using ASAP²™:

• Flexible match-action flow tables

• Tunneling encapsulation/decapsulation
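To illustrate the match-action idea in general terms, here is a small software model of a flow table. It is a conceptual sketch only: the class names, field names, and action strings are all hypothetical, and it does not represent the adapter's hardware tables or the OVS API. Each rule matches on a subset of packet header fields and returns an ordered list of actions, and a packet that matches no rule falls back to software processing.

```python
from dataclasses import dataclass, field


@dataclass
class FlowRule:
    match: dict        # header field -> required value; absent fields are wildcards
    actions: list      # ordered action names (hypothetical strings)
    priority: int = 0  # higher priority rules are checked first


@dataclass
class FlowTable:
    rules: list = field(default_factory=list)

    def add(self, rule):
        self.rules.append(rule)
        # Keep rules ordered so lookup returns the highest-priority match.
        self.rules.sort(key=lambda r: r.priority, reverse=True)

    def lookup(self, packet):
        for rule in self.rules:
            if all(packet.get(k) == v for k, v in rule.match.items()):
                return rule.actions
        return ["send_to_software"]  # table miss: no rule matched


table = FlowTable()
table.add(FlowRule({"tunnel": "vxlan", "vni": 100},
                   ["decap_vxlan", "forward:vf1"], priority=10))
table.add(FlowRule({"dst_ip": "10.0.0.5"},
                   ["encap_vxlan:vni=100", "forward:uplink"], priority=5))

print(table.lookup({"tunnel": "vxlan", "vni": 100, "dst_ip": "10.0.0.9"}))
print(table.lookup({"dst_ip": "192.168.1.1"}))
```

The first lookup matches the tunnel rule and yields a decapsulation followed by a forward; the second matches nothing and takes the table-miss path.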

Quality of Service (QoS)

Support for port-based Quality of Service, enabling the adapter to meet varying application requirements for latency and service-level agreements (SLAs).

Hardware-based I/O Virtualization

ConnectX-6 provides dedicated adapter resources and guaranteed isolation and protection for virtual machines within the server.

Storage Acceleration

A consolidated compute and storage network achieves significant cost-performance advantages over multi-fabric networks. Standard block and file access protocols can leverage:

• RDMA for high-performance storage access

• NVMe over Fabrics (NVMe-oF) offloads for the target machine

• Erasure Coding

• T10-DIF Signature Handover
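For readers unfamiliar with T10-DIF, the sketch below shows in software what the 8-byte protection information attached to each data block contains: a 16-bit guard tag (a CRC over the block using polynomial 0x8BB7), a 16-bit application tag, and a 32-bit reference tag. This is an illustration of the data format only, not of how the adapter performs the signature handover in hardware; the function names and the sample tag values are assumptions.

```python
import struct

T10_DIF_POLY = 0x8BB7  # CRC-16 polynomial used for the T10-DIF guard tag


def crc16_t10dif(data: bytes) -> int:
    """Bitwise CRC-16 (MSB first, initial value 0, no final XOR)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ T10_DIF_POLY) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def protection_info(block: bytes, app_tag: int, ref_tag: int) -> bytes:
    """Pack guard, application, and reference tags as 8 big-endian bytes."""
    return struct.pack(">HHI", crc16_t10dif(block), app_tag, ref_tag)


if __name__ == "__main__":
    block = bytes(range(256)) * 2            # sample 512-byte data block
    pi = protection_info(block, app_tag=0, ref_tag=42)  # illustrative tag values
    print(pi.hex())
```

A receiver recomputes the guard tag over the data it received and compares it with the stored one; offloading that check to the adapter removes the per-block CRC work from the host CPU.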

SR-IOV

ConnectX-6 SR-IOV technology provides dedicated adapter resources and guaranteed isolation and protection for virtual machines (VMs) within the server.
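On Linux, the number of SR-IOV virtual functions a device supports and the number currently enabled are exposed through the standard PCI sysfs attributes `sriov_totalvfs` and `sriov_numvfs`. The following minimal sketch reads both for a given network interface. The helper name `sriov_status` and the interface name `ens1f0` are hypothetical; substitute the name of the adapter port on your system.

```python
from pathlib import Path


def sriov_status(iface, sysfs_net="/sys/class/net"):
    """Return total and active VF counts for iface, or None if unavailable.

    Hypothetical helper for illustration; it only reads sysfs files.
    """
    dev = Path(sysfs_net) / iface / "device"
    total = dev / "sriov_totalvfs"
    current = dev / "sriov_numvfs"
    if not (total.is_file() and current.is_file()):
        return None  # interface missing, or device does not expose SR-IOV
    return {
        "total_vfs": int(total.read_text()),
        "active_vfs": int(current.read_text()),
    }


if __name__ == "__main__":
    status = sriov_status("ens1f0")  # assumed interface name
    if status is None:
        print("SR-IOV attributes not found for this interface")
    else:
        print(f"{status['active_vfs']} of {status['total_vfs']} VFs enabled")
```

The sketch is read-only. Enabling virtual functions also requires SR-IOV to be enabled in the adapter firmware and the system BIOS, which is outside what this example covers.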

High-Performance Accelerations

• Tag Matching and Rendezvous Offloads

• Adaptive Routing on Reliable Transport

• Burst Buffer Offloads for Background Checkpointing
