Appendix 1 Special soft device list

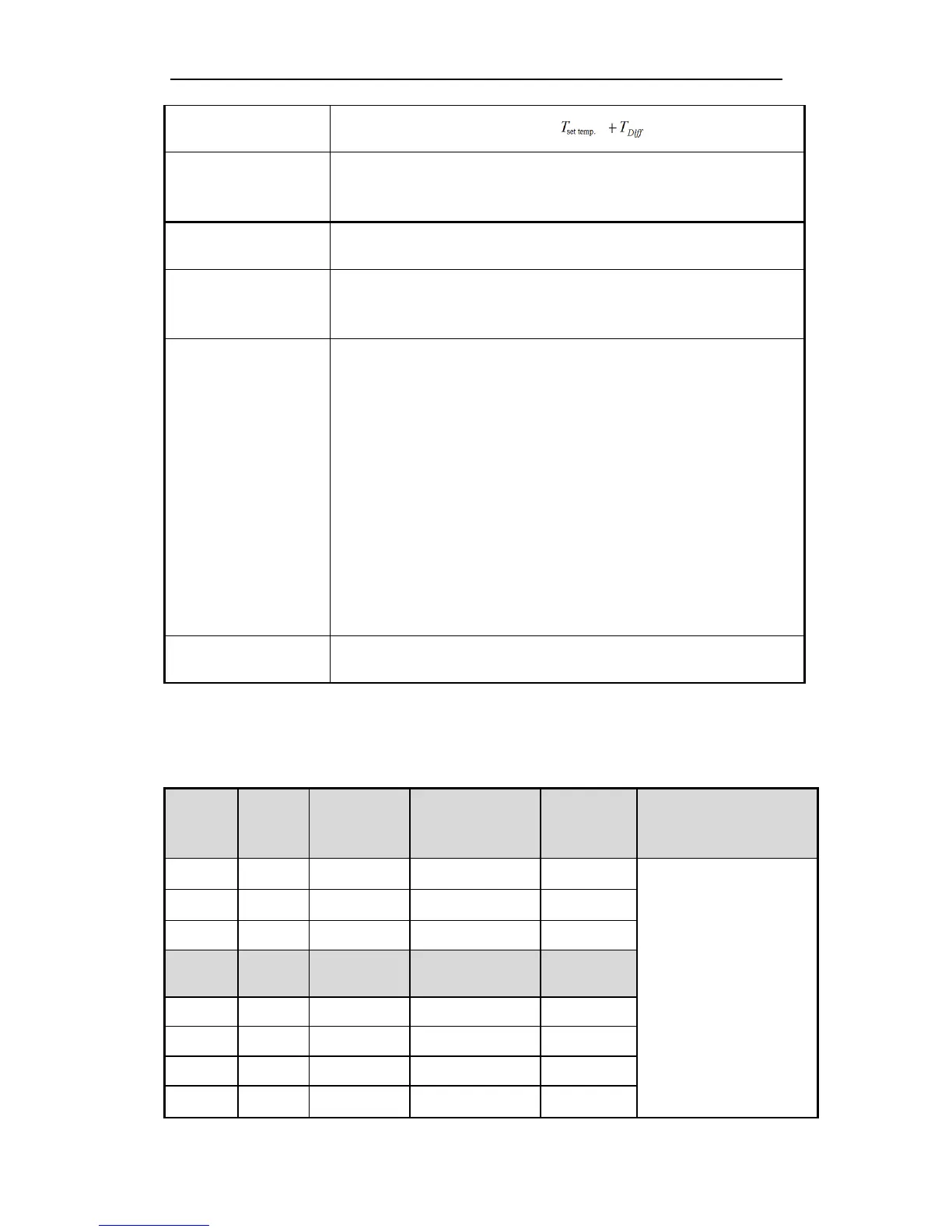

1CH QD101

- - -

XC-E2AD2PT2DA

| Item | Module | PT0 (0.01℃) | PT1 (0.01℃) | AD0 | AD1 |
| --- | --- | --- | --- | --- | --- |
| Display temperature (unit: 0.1℃) | module 1 | ID100 | ID101 | ID102 | ID103 |
| PID output (X input returned to the main unit) | module 1 | X100 | X101 | X102 | X103 |
| Connection status (0 = connected, 1 = disconnected) | module 1 | X110 | X111 | X112 | X113 |
| PID auto tune error bit (0 = normal, 1 = parameter error) | module 1 | X120 | X121 | X122 | X123 |
| Channel enable signal | module 1 | Y100 | Y101 | Y102 | Y103 |

Related parameters: comments and descriptions

Auto tune PID control bit

Auto tune activation signal: setting this bit to 1 starts the auto tune stage. When auto tuning finishes, the PID parameters and the temperature control cycle value are refreshed and this bit is reset automatically.

Users can also read this bit's status: 1 means auto tuning is in progress; 0 means no auto tune is running or auto tuning has finished.
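The auto tune handshake described above (write 1, wait for the module to clear the bit, then check the PID auto tune error bit, X120 to X123 in the device table) can be sketched as a small simulation. This is a hedged illustration only: `SimulatedChannel`, its fields, `poll()`, and `run_autotune()` are hypothetical names standing in for real PLC I/O, and the number of scans a real auto tune takes is not stated in this appendix.

```python
from dataclasses import dataclass


@dataclass
class SimulatedChannel:
    """Hypothetical stand-in for one temperature channel; not a real driver."""
    ticks_to_finish: int = 3        # assumed number of scans until tuning completes
    autotune_bit: int = 0           # 1 = auto tune in progress, 0 = idle/finished
    error_bit: int = 0              # 0 = normal, 1 = parameter error
    params_refreshed: bool = False  # set when the module refreshes PID parameters

    def poll(self) -> None:
        """Advance the simulation by one scan."""
        if self.autotune_bit == 1:
            self.ticks_to_finish -= 1
            if self.ticks_to_finish <= 0:
                # Tuning done: refresh parameters, then the module clears the bit itself.
                self.params_refreshed = True
                self.autotune_bit = 0


def run_autotune(ch: SimulatedChannel, max_polls: int = 100) -> bool:
    """Start auto tuning, wait for the bit to reset, and report success."""
    ch.autotune_bit = 1                 # writing 1 enters the auto tune stage
    for _ in range(max_polls):
        ch.poll()
        if ch.autotune_bit == 0:        # bit reset automatically => tuning finished
            return ch.error_bit == 0    # then check the auto tune error bit
    raise TimeoutError("auto tune did not finish within max_polls scans")
```

The `max_polls` guard is a design choice of this sketch: it keeps the caller from waiting forever if the bit never clears.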

PID output value (operation value)

Digital output value range: 0~4095.

If the PID output drives an analogue actuator (such as the opening of a steam valve or the conduction angle of a thyristor), transfer this value to the analogue output module to meet the control requirements.
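When passing the 0~4095 PID output to an analogue actuator, it is often convenient to express it in engineering units. The sketch below assumes a linear relationship between the digital value and the actuator range; `pid_output_to_range` and its `lo`/`hi` parameters are hypothetical, and the real mapping depends on the analogue output module and actuator used.

```python
PID_OUT_MIN = 0
PID_OUT_MAX = 4095  # digital output range stated in this appendix


def pid_output_to_range(value: int, lo: float = 0.0, hi: float = 100.0) -> float:
    """Linearly map a PID output value (0..4095) onto [lo, hi].

    Assumes a linear actuator characteristic; with lo=0 and hi=100 the
    result can be read as a valve opening in percent.
    """
    if not PID_OUT_MIN <= value <= PID_OUT_MAX:
        raise ValueError(f"PID output {value} outside {PID_OUT_MIN}..{PID_OUT_MAX}")
    return lo + (hi - lo) * (value - PID_OUT_MIN) / (PID_OUT_MAX - PID_OUT_MIN)
```

For example, `pid_output_to_range(4095)` gives `100.0` (fully open) and `pid_output_to_range(0)` gives `0.0`.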

PID parameters (P, I, D)

The optimal parameters can be obtained through PID auto tuning. If the current PID control cannot meet the control requirements, users can also write the PID parameters manually based on experience; the module performs PID control using the parameters that are set.
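To illustrate what the P, I and D parameters do, here is a generic textbook discrete positional PID step with its output clamped to the 0~4095 range of the PID output value. This is an assumption-laden sketch, not the module's internal algorithm, which this appendix does not describe: the gain names `kp`, `ki`, `kd`, the sample period `dt`, and the simple conditional-integration anti-windup are all choices made here for illustration. `sv` and `pv` are assumed to be in the same units (for example 0.1℃).

```python
from dataclasses import dataclass

OUT_MIN, OUT_MAX = 0, 4095  # PID output range from this appendix


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    dt: float = 1.0          # assumed sample period
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, sv: float, pv: float) -> int:
        """Return one clamped output for set value sv and process value pv."""
        error = sv - pv                      # heating: below target => positive error
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate = self.integral + error * self.dt
        out = self.kp * error + self.ki * candidate + self.kd * derivative
        if OUT_MIN <= out <= OUT_MAX:
            # Simple anti-windup: only accept the new integral when not saturated.
            self.integral = candidate
        return int(min(max(out, OUT_MIN), OUT_MAX))
```

Raising `kp` reacts harder to the present error, `ki` removes steady-state offset over time, and `kd` damps fast changes; auto tuning chooses these values for you.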

PID operation range (Diff) (unit: 0.1℃)

PID operation is active only within ±Diff of the set temperature. In real temperature control, if the temperature is lower than (set temperature - Diff), the PID outputs its maximum value; if the temperature is higher than (set temperature + Diff), the PID outputs its minimum value.
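The ±Diff band rule can be written as a small function. This is a sketch under stated assumptions: `band_limited_output` is a hypothetical name, all temperatures are integers in 0.1℃ units, the maximum and minimum outputs are taken as 4095 and 0 from the PID output range, and a temperature exactly on a band edge is treated here as inside the band (the appendix does not say).

```python
OUT_MIN, OUT_MAX = 0, 4095  # PID output range from this appendix


def band_limited_output(pv: int, sv: int, diff: int, pid_out: int) -> int:
    """Apply the ±Diff operating band; pv, sv and diff are in 0.1℃ units."""
    if pv < sv - diff:
        return OUT_MAX        # well below target: full output
    if pv > sv + diff:
        return OUT_MIN        # well above target: minimum output
    return pid_out            # inside the band: use the PID result
```

For example, with a set value of 1000 (100.0℃) and Diff of 100 (10.0℃), a reading of 800 (80.0℃) yields the full output of 4095.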

Temperature difference δ (unit: 0.1℃)

Display temperature value = (sample temperature + δ) / 10. Adjusting δ makes the displayed temperature equal or close to the real temperature. This parameter is signed (it may be negative or positive); its unit is 0.1℃ and its default value is 0.
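The display formula, and the δ needed to make the display match a trusted reference reading, follow directly from the relationship above (rearranging gives δ = 10 × display - sample). The function names and the `reference_display` parameter (a reading from a reference thermometer, in display units) are hypothetical; the sketch simply restates the formula and does not assume any unit beyond what the appendix gives.

```python
def display_temperature(sample: int, delta: int = 0) -> float:
    """Display value = (sample temperature + delta) / 10, per this appendix."""
    return (sample + delta) / 10


def delta_for_reference(sample: int, reference_display: float) -> int:
    """Signed delta that makes the display equal a reference reading.

    Rearranged from display = (sample + delta) / 10.
    """
    return round(reference_display * 10 - sample)
```

For example, if the sample is 2500 but a reference thermometer corresponds to a display of 249.5, `delta_for_reference(2500, 249.5)` returns `-5`.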

Set temperature value (unit: 0.1℃)

The control system's target temperature. The range is 0~1000℃ and the precision is 0.1℃.
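Because the set value is stored in 0.1℃ units, a target in ℃ must be multiplied by 10 before it is written. The helper below is a hypothetical sketch (`set_value_to_raw` is not a module function) that converts and range-checks a target against the stated 0~1000℃ limits, rounding to the nearest 0.1℃.

```python
SV_MIN_C, SV_MAX_C = 0.0, 1000.0  # set temperature range stated in this appendix


def set_value_to_raw(celsius: float) -> int:
    """Convert a target temperature in ℃ to the 0.1℃ units of the set value."""
    if not SV_MIN_C <= celsius <= SV_MAX_C:
        raise ValueError(f"set temperature {celsius}℃ outside 0~1000℃")
    return round(celsius * 10)   # precision is 0.1℃, so round to the nearest unit
```

For example, a target of 85.3℃ becomes the raw value 853.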
