Appendix A

DisCache

impressive performance gains, saving both seek and latency time -- 27 milliseconds on average -- when the desired data resides in the cache.

MAXIMUM FLEXIBILITY, EASY TO USE

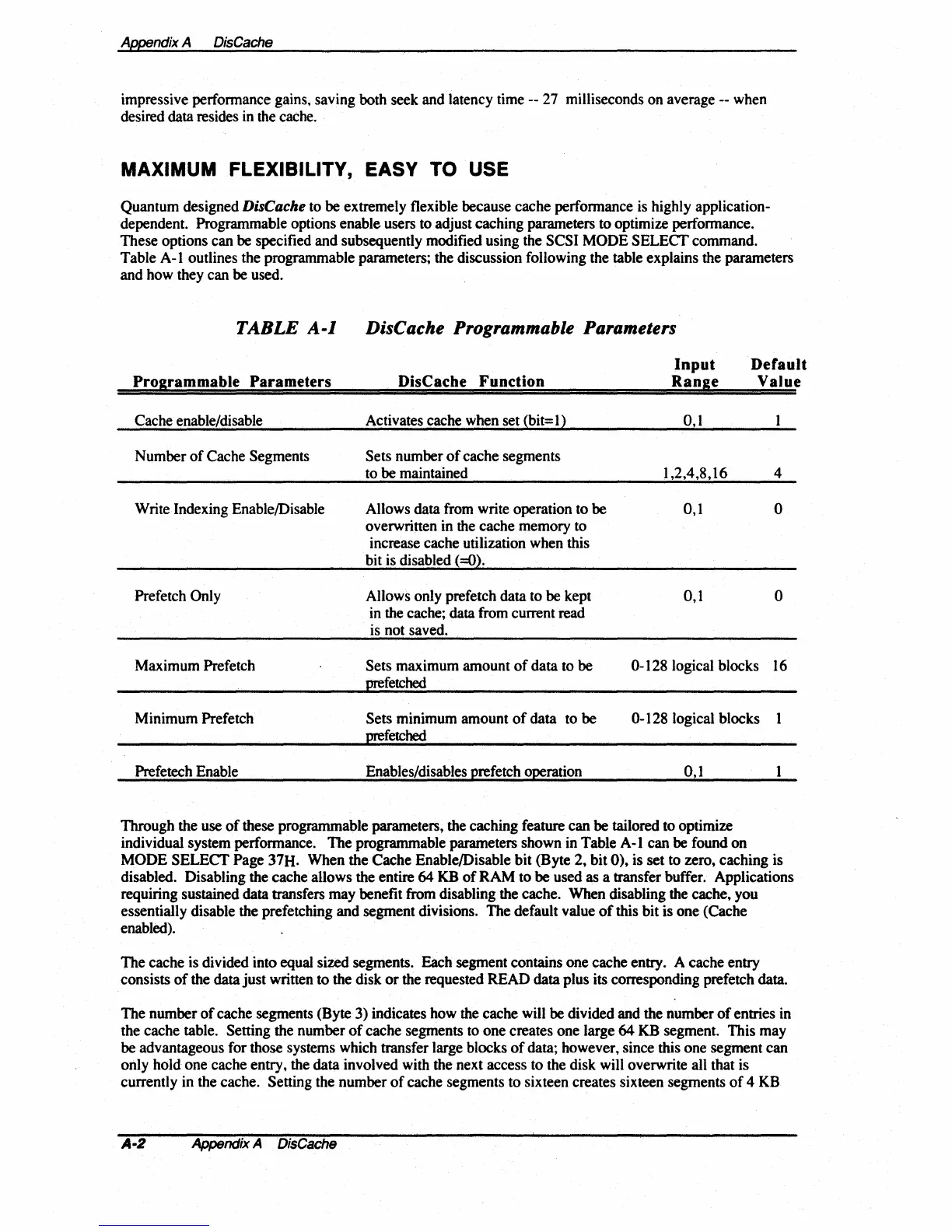

Quantum designed DisCache to be extremely flexible because cache performance is highly application-dependent. Programmable options enable users to adjust caching parameters to optimize performance. These options can be specified and subsequently modified using the SCSI MODE SELECT command.

Table A-1 outlines the programmable parameters; the discussion following the table explains the parameters and how they can be used.

TABLE A-1 DisCache Programmable Parameters

| Programmable Parameter | DisCache Function | Input Range | Default Value |
|---|---|---|---|
| Cache Enable/Disable | Activates cache when set (bit = 1) | 0, 1 | 1 |
| Number of Cache Segments | Sets number of cache segments to be maintained | 1, 2, 4, 8, 16 | 4 |
| Write Indexing Enable/Disable | Allows data from a write operation to be overwritten in the cache memory to increase cache utilization when this bit is disabled (= 0) | 0, 1 | 0 |
| Prefetch Only | Allows only prefetch data to be kept in the cache; data from the current read is not saved | 0, 1 | 0 |
| Maximum Prefetch | Sets maximum amount of data to be prefetched | 0-128 logical blocks | 16 |
| Minimum Prefetch | Sets minimum amount of data to be prefetched | 0-128 logical blocks | 1 |
| Prefetch Enable | Enables/disables prefetch operation | 0, 1 | |
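The input ranges in Table A-1 lend themselves to a simple range check before any values are sent to the drive. The following is a minimal sketch of such a check, not part of the DisCache interface: the dictionary keys (`cache_enable`, `max_prefetch`, and so on) and the `validate` function are hypothetical names chosen for illustration; only the permitted values come from the table.

```python
# Permitted values taken from Table A-1. The key names are illustrative
# assumptions, not identifiers defined by the drive or by SCSI.
ALLOWED = {
    "cache_enable": {0, 1},
    "cache_segments": {1, 2, 4, 8, 16},
    "write_indexing": {0, 1},
    "prefetch_only": {0, 1},
    "max_prefetch": range(0, 129),  # 0-128 logical blocks
    "min_prefetch": range(0, 129),  # 0-128 logical blocks
    "prefetch_enable": {0, 1},
}


def validate(settings):
    """Return a list of error strings; an empty list means all values are in range."""
    errors = []
    for name, value in settings.items():
        if name not in ALLOWED:
            errors.append(f"{name}: unknown parameter")
        elif value not in ALLOWED[name]:
            errors.append(f"{name}: {value} is outside the permitted range")
    return errors


if __name__ == "__main__":
    # Three segments is not one of the permitted counts, so one error is reported.
    for problem in validate({"cache_enable": 1, "cache_segments": 3}):
        print(problem)
```

A check of this kind only catches values outside the table's ranges; it says nothing about which combination of values suits a given application.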

Through the use of these programmable parameters, the caching feature can be tailored to optimize individual system performance. The programmable parameters shown in Table A-1 can be found on MODE SELECT Page 31H. When the Cache Enable/Disable bit (Byte 2, bit 0) is set to zero, caching is disabled. Disabling the cache allows the entire 64 KB of RAM to be used as a transfer buffer. Applications requiring sustained data transfers may benefit from disabling the cache. Disabling the cache essentially disables prefetching and segment division as well. The default value of this bit is one (cache enabled).
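As an illustration of the byte positions named in this appendix, the sketch below edits a copy of a Page 31H buffer in memory. It is an assumption-laden example rather than a description of the drive's firmware or of any host driver: the function names are hypothetical, the buffer is assumed to have been obtained elsewhere (for example from a MODE SENSE of the same page), Byte 3 is assumed to hold the segment count directly, and the segment-size helper assumes the 64 KB of RAM is split evenly across segments. No SCSI command is issued.

```python
CACHE_RAM_KB = 64                  # total buffer RAM stated in this appendix
VALID_SEGMENTS = (1, 2, 4, 8, 16)  # permitted segment counts from Table A-1


def set_cache_enable(page, enabled):
    """Return a copy of the page with the Cache Enable bit (Byte 2, bit 0) set or cleared."""
    if len(page) < 4:
        raise ValueError("page buffer is too short")
    out = bytearray(page)
    if enabled:
        out[2] |= 0x01
    else:
        out[2] &= 0xFE
    return out


def set_segment_count(page, segments):
    """Return a copy of the page with Byte 3 set to the segment count.

    Assumption: Byte 3 stores the count itself rather than an encoded value.
    """
    if segments not in VALID_SEGMENTS:
        raise ValueError(f"segment count must be one of {VALID_SEGMENTS}")
    if len(page) < 4:
        raise ValueError("page buffer is too short")
    out = bytearray(page)
    out[3] = segments
    return out


def segment_size_kb(segments):
    """Size of each segment in KB, assuming an even split of the 64 KB RAM."""
    if segments not in VALID_SEGMENTS:
        raise ValueError(f"segment count must be one of {VALID_SEGMENTS}")
    return CACHE_RAM_KB // segments


if __name__ == "__main__":
    # Placeholder buffer: only bytes 2 and 3 matter to this sketch.
    page = bytes([0x31, 0x00, 0x01, 0x04])
    page = set_segment_count(set_cache_enable(page, True), 16)
    print(page.hex(), segment_size_kb(16))
```

Working on a copy keeps the original buffer available for comparison, which can be useful when checking that only the intended bits changed before the page is written back.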

The cache is divided into equal-sized segments. Each segment contains one cache entry. A cache entry consists of the data just written to the disk or the requested READ data plus its corresponding prefetch data. The number of cache segments (Byte 3) indicates how the cache will be divided and the number of entries in the cache table. Setting the number of cache segments to one creates one large 64 KB segment. This may be advantageous for those systems which transfer large blocks of data; however, since this one segment can only hold one cache entry, the data involved with the next access to the disk will overwrite all that is currently in the cache. Setting the number of cache segments to sixteen creates sixteen segments of 4 KB
