1 Introduction


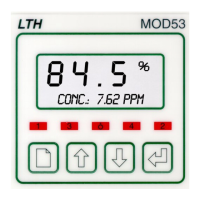

ABOUT THE MOD53

The MOD53 is a microprocessor-controlled dissolved oxygen measurement instrument. It uses a multifunction LCD to display readings and provide feedback to the operator. Optional configurations provide fully configurable control, alarm and feedback functions using up to four relays and two 0/4-20 mA current outputs.
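The scaling rule for the current outputs is not given in this section. A common convention, assumed in the sketch below, is a linear mapping in which the bottom of the configured range gives 4 mA (or 0 mA in 0-20 mA mode) and the top gives 20 mA. The function names and the clamping of out-of-range readings are illustrative choices, not documented MOD53 behaviour. The inverse mapping is the kind of conversion a software-scalable 4-20 mA input, such as the pressure input listed in the specification, would need.

```python
def value_to_ma(value, range_low, range_high, zero_ma=4.0, full_scale_ma=20.0):
    """Map a reading linearly onto a current output (hypothetical helper).

    zero_ma=4.0 gives a 4-20 mA output; zero_ma=0.0 gives a 0-20 mA output.
    Readings outside the configured range are clamped to the end points
    (an assumption; real instruments may allow a small over-range).
    """
    if range_high == range_low:
        raise ValueError("range_high must differ from range_low")
    fraction = (value - range_low) / (range_high - range_low)
    fraction = min(max(fraction, 0.0), 1.0)  # clamp to the 0..1 span
    return zero_ma + fraction * (full_scale_ma - zero_ma)


def ma_to_value(current_ma, range_low, range_high, zero_ma=4.0, full_scale_ma=20.0):
    """Inverse mapping: convert a loop current back to engineering units."""
    fraction = (current_ma - zero_ma) / (full_scale_ma - zero_ma)
    return range_low + fraction * (range_high - range_low)


if __name__ == "__main__":
    # Hypothetical 0 to 20 ppm output range, 10 ppm reading.
    print(value_to_ma(10.0, 0.0, 20.0))               # 12.0 (4-20 mA mode)
    print(value_to_ma(10.0, 0.0, 20.0, zero_ma=0.0))  # 10.0 (0-20 mA mode)
    # Hypothetical 0 to 10 bar pressure transmitter reading 8 mA.
    print(ma_to_value(8.0, 0.0, 10.0))                # 2.5
```

Keeping the zero point as a parameter lets the same code cover both the 0-20 mA and 4-20 mA output modes.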

UNIT SPECIFICATION

| Parameter | Specification |
|---|---|
| Sensor input | Galvanic (Mackereth): 0 to 9.999 mA; Polarographic (Clark): 0 to 500.0 nA |
| Sensor bias voltage | Software programmable, -1.000 to +1.000 V; resolution ±1 mV; output accuracy ±3 mV |
| Sensor membrane correction factor | Software programmable, 0 to 9999 |
| Sensor cable | Up to 100 metres |
| Ranges of measurement | 0 to 199.9 % saturation; 0 to 30.00 ppm concentration; 0 to 999.9 mmHg (calibration specific); 0 to 9999 mbar pO₂ (calibration specific) |
| Accuracy | ±3 µA (galvanic mode); ±1.0 nA (polarographic mode) |
| Linearity | ±0.1 % of range |
| Repeatability | ±0.1 % of range |
| Temperature sensor | 4-wire interface, operating with up to 100 metres of cable; software-selectable sensor type, including PT100 and PT1000 RTDs |
| Temperature measurement range | -50 °C to +300 °C (when using PT100 or PT1000) |
| Temperature accuracy | ±0.2 °C (dependent on sensor configuration) |
| Operator adjustment (temperature) | ±50 °C (±90 °F) |
| Temperature compensation | Automatic, or manually set from 0 °C to 100 °C |
| Pressure compensation | Active, from a 4-20 mA input (direct, or 24 V loop-powered from the MOD53), software scalable; or user programmable from 0.50 to 9.99 bar. User-selectable pressure damping. |
| Salinity compensation | User programmable from 0 to 40.0 ppt |
| Ambient operating temperature | -20 °C to +50 °C (-4 °F to +122 °F) for full specification |
| Ambient temperature variation | ±0.01 % of range / °C (typical) |
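The specification lists PT100 and PT1000 RTDs but not the resistance-to-temperature conversion the instrument applies. Platinum RTDs are commonly linearised with the Callendar-Van Dusen equation and the IEC 60751 coefficients; the sketch below assumes that standard curve (R0 = 100 Ω for PT100, 1000 Ω for PT1000) and is not taken from the MOD53 firmware. From 0 °C upwards the equation is quadratic and inverts in closed form; below 0 °C the extra C term is handled with a few Newton iterations.

```python
import math

# IEC 60751 Callendar-Van Dusen coefficients (alpha = 0.00385 platinum).
# Assumed here; the MOD53's internal linearisation is not documented.
A = 3.9083e-3
B = -5.775e-7
C = -4.183e-12  # applies only below 0 degC


def rtd_resistance(temp_c, r0=100.0):
    """Resistance in ohms at temp_c; r0=100 for PT100, r0=1000 for PT1000."""
    r = 1.0 + A * temp_c + B * temp_c ** 2
    if temp_c < 0.0:
        r += C * (temp_c - 100.0) * temp_c ** 3
    return r0 * r


def _rtd_slope(temp_c, r0):
    """Analytic derivative dR/dT of rtd_resistance."""
    d = A + 2.0 * B * temp_c
    if temp_c < 0.0:
        d += C * (4.0 * temp_c ** 3 - 300.0 * temp_c ** 2)
    return r0 * d


def rtd_temperature(resistance, r0=100.0):
    """Invert the Callendar-Van Dusen equation to get temperature in degC."""
    ratio = resistance / r0
    # Closed-form root of B*t^2 + A*t + (1 - ratio) = 0 (exact for t >= 0).
    t = (-A + math.sqrt(A * A - 4.0 * B * (1.0 - ratio))) / (2.0 * B)
    if t < 0.0:
        # Below 0 degC the C term matters: refine with Newton's method.
        for _ in range(6):
            t -= (rtd_resistance(t, r0) - resistance) / _rtd_slope(t, r0)
    return t


if __name__ == "__main__":
    for temp in (-50.0, 0.0, 25.0, 100.0, 300.0):
        r = rtd_resistance(temp)
        print(temp, round(r, 3), round(rtd_temperature(r), 6))
```

A PT1000 uses the same curve scaled by ten, so only `r0` changes between the two sensor types.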
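Converting between % saturation and ppm (mg/L) concentration requires the oxygen solubility at the measured temperature, salinity and pressure, which is why the specification lists temperature, pressure and salinity compensation. The solubility model the MOD53 uses is not stated here. The sketch below assumes the Weiss (1970) equation for air-saturated water, converts its ml/L result to mg/L with 1.429 mg/ml, and applies a simple proportional pressure correction that ignores water vapour pressure; it is an approximation, not the instrument's algorithm, and the function names are hypothetical.

```python
import math

# Weiss (1970) coefficients for O2 solubility in ml/L at 1 atm moist air.
# Assumed model; not confirmed as the MOD53's method.
A1, A2, A3, A4 = -173.4292, 249.6339, 143.3483, -21.8492
B1, B2, B3 = -0.033096, 0.014259, -0.0017000
MG_PER_ML_O2 = 1.429        # mass of 1 ml of O2 at STP, in mg
STD_PRESSURE_BAR = 1.01325  # 1 standard atmosphere


def saturation_mg_per_l(temp_c, salinity_ppt=0.0, pressure_bar=STD_PRESSURE_BAR):
    """Approximate O2 solubility (mg/L) for air-saturated water."""
    t = (temp_c + 273.15) / 100.0  # absolute temperature / 100
    ln_c = (A1 + A2 / t + A3 * math.log(t) + A4 * t
            + salinity_ppt * (B1 + B2 * t + B3 * t * t))
    c_mg = math.exp(ln_c) * MG_PER_ML_O2
    # Simple proportional pressure correction (ignores water vapour pressure).
    return c_mg * pressure_bar / STD_PRESSURE_BAR


def percent_sat_to_ppm(percent_sat, temp_c, salinity_ppt=0.0,
                       pressure_bar=STD_PRESSURE_BAR):
    """Convert % saturation to an approximate concentration in ppm (mg/L)."""
    return percent_sat / 100.0 * saturation_mg_per_l(temp_c, salinity_ppt, pressure_bar)


if __name__ == "__main__":
    print(saturation_mg_per_l(20.0))                     # fresh water, 20 degC
    print(saturation_mg_per_l(20.0, salinity_ppt=35.0))  # seawater, 20 degC
    print(percent_sat_to_ppm(50.0, 20.0))
```

At 20 °C in fresh water at standard pressure this gives roughly 9.1 mg/L, so a reading of 50 % saturation corresponds to about 4.5 ppm.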
