82 AMCC Proprietary

Revision 1.02 - September 10, 2007

PPC405 Processor

Preliminary User’s Manual

The DCU can accept up to three outstanding store commands before stalling the CPU pipeline for additional data cache commands.

The DCU can have two flushes pending before stalling the CPU pipeline.

DCU cache operations other than loads and stores stall the CPU pipeline until all prior data cache operations complete. Any subsequent data cache command will stall the pipeline until the prior operation is complete.
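The stall rules above can be expressed as a small behavioral model. This is a minimal sketch, not AMCC's logic: the `DcuState` class, its field names, and the string operation names (`"load"`, `"store"`, `"dcbi"`, and so on) are hypothetical. The two-flush limit is not modeled; pending flushes only count as outstanding work.

```python
from dataclasses import dataclass

# Limit quoted in the text; all names below are hypothetical.
MAX_OUTSTANDING_STORES = 3


@dataclass
class DcuState:
    outstanding_stores: int = 0
    pending_flushes: int = 0
    non_load_store_op_active: bool = False  # e.g. a dcbi or dccci still in progress

    def idle(self) -> bool:
        """True when no earlier data cache operation is still outstanding."""
        return (self.outstanding_stores == 0
                and self.pending_flushes == 0
                and not self.non_load_store_op_active)

    def would_stall(self, op: str) -> bool:
        """Return True if issuing `op` now would stall the CPU pipeline."""
        # While a non-load/store cache operation runs, every later command waits.
        if self.non_load_store_op_active:
            return True
        # With three stores outstanding, any further data cache command stalls.
        if self.outstanding_stores >= MAX_OUTSTANDING_STORES:
            return True
        # This model places no other limit on loads and stores.
        if op in ("load", "store"):
            return False
        # Any other cache operation waits until all prior operations complete.
        return not self.idle()
```

For example, `DcuState(outstanding_stores=1).would_stall("dcbi")` is `True`, while `DcuState().would_stall("dcbi")` is `False`.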

3.7.2 Cache Operation Priorities

The DCU uses a priority signal to improve performance when pipeline stalls occur. When the pipeline is stalled because of a data cache operation, the DCU asserts the priority signal to the PLB. The priority signal tells the external bus that the DCU requires immediate service, and is valid only when the data cache is requesting access to the PLB. The priority signal is asserted for all loads that require external data, or when the data cache is requesting the PLB and stalling an operation that is being presented to the data cache.
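The assertion rule for the priority signal reduces to a short Boolean function. The sketch below is illustrative only: the function and parameter names are hypothetical, and PLB arbitration and timing are not modeled.

```python
def plb_priority(requesting_plb: bool,
                 load_needs_external_data: bool,
                 stalling_presented_op: bool) -> bool:
    """Return the DCU priority signal value under the rule described above."""
    # The signal is valid only while the data cache is requesting the PLB.
    if not requesting_plb:
        return False
    # Asserted for any load that needs external data, or when the pending
    # PLB request is stalling an operation presented to the data cache.
    return load_needs_external_data or stalling_presented_op
```

Checked against the "Any store" row of Table 3-3: an outstanding store alone gives `plb_priority(True, False, False) == False`, and once a following operation is held off, `plb_priority(True, False, True) == True`.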

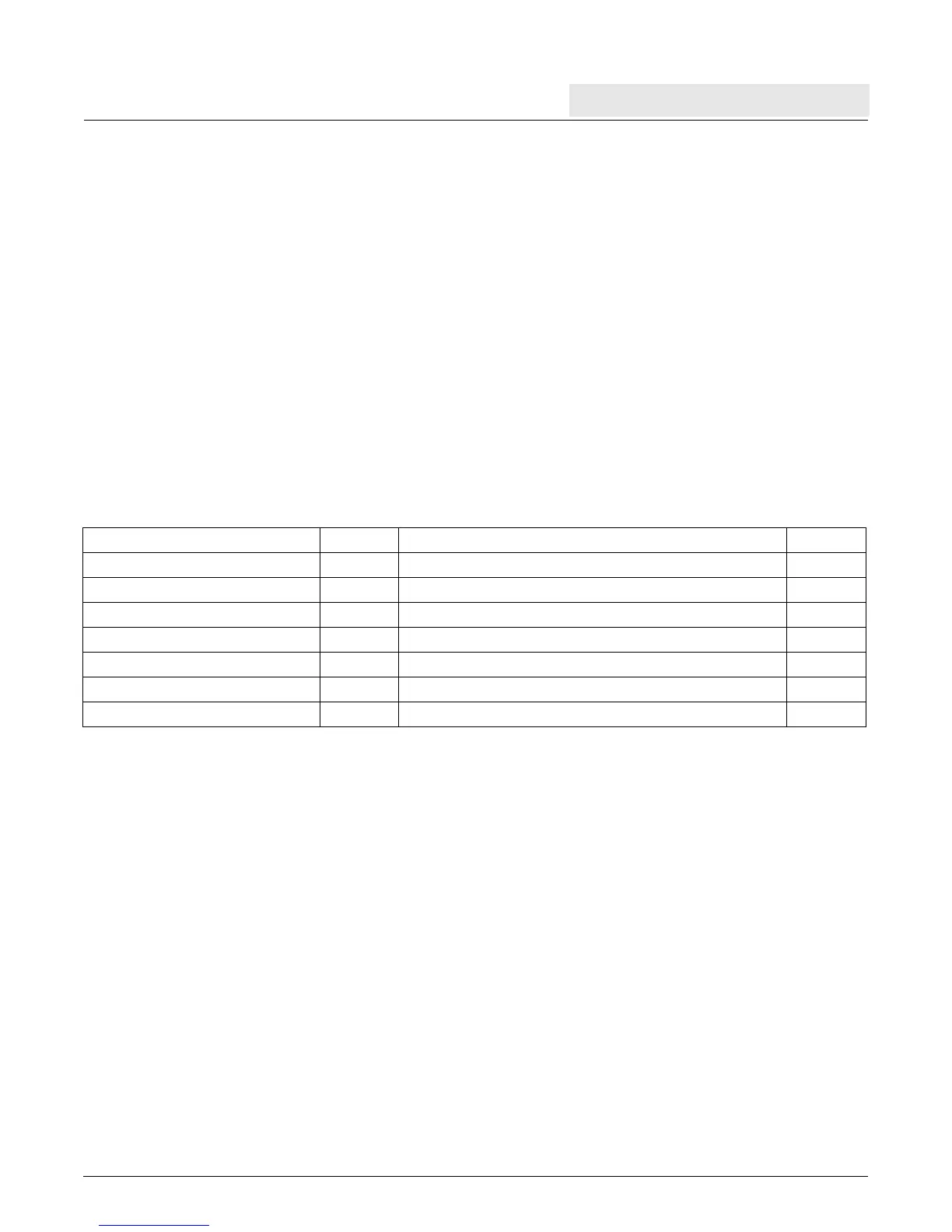

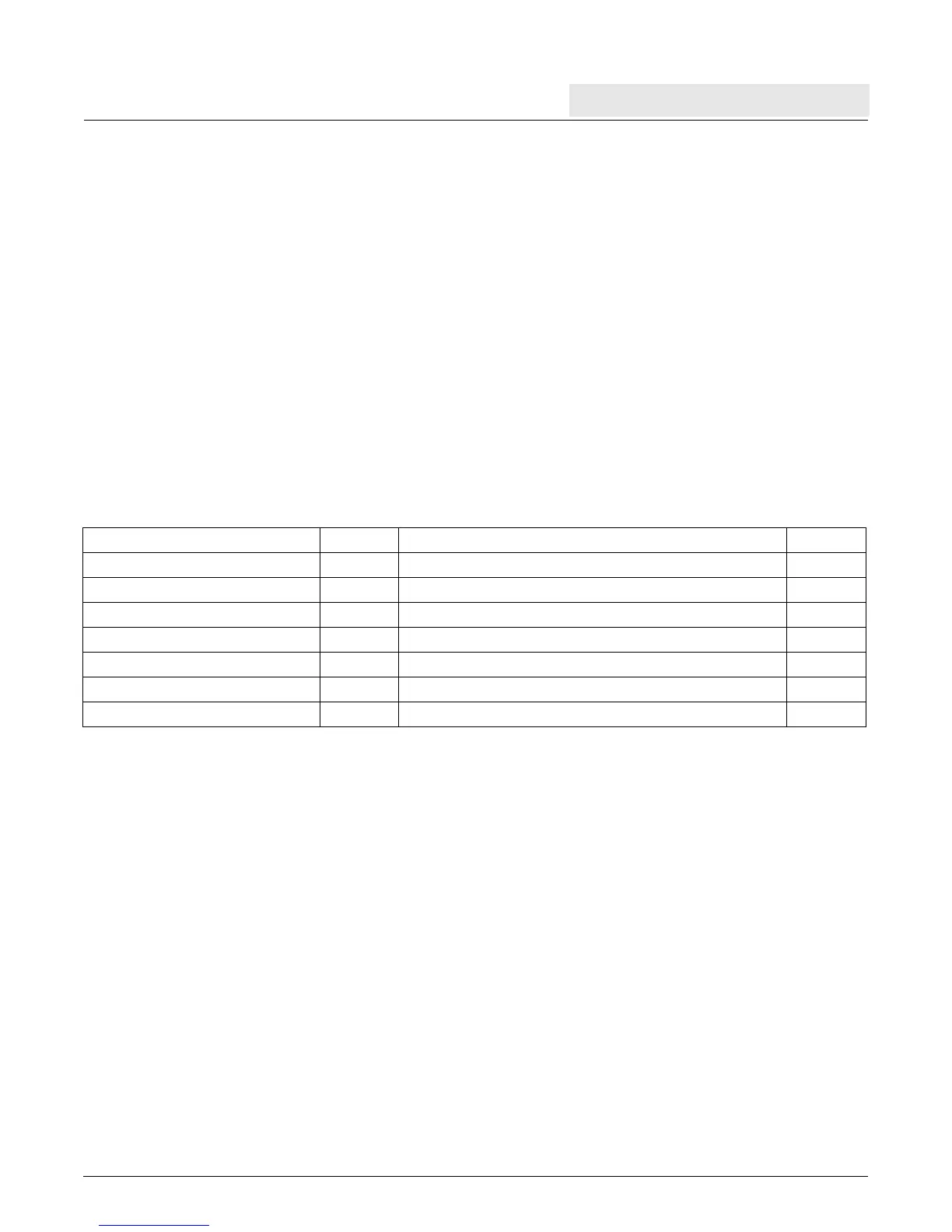

Table 3-3 provides examples of when the priority signal is asserted and deasserted.

3.7.3 Simultaneous Cache Operations

Some cache operations can occur simultaneously to improve DCU performance. For example, combinations of line fills, line flushes, word loads/stores, and operations that hit in the cache can occur simultaneously. Cache operations other than loads/stores cannot begin until the PLB completes all previous operations.

3.7.4 Sequential Cache Operations

Some common cache operations, when performed sequentially, can limit DCU performance: sequential loads/stores to noncacheable storage regions, sequential line fills, and sequential line flushes.

In the case of sequential cache hits, which are the most commonly occurring operations, the DCU loads or stores data every cycle. In such cases, the DCU does not limit performance.

However, when a load from a noncacheable storage region is followed by multiple loads from noncacheable regions, the loads can complete no faster than every four cycles, assuming that each address is accepted during the same cycle in which it is requested, and that the data is returned during the cycle after the load is accepted.
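The best-case spacing for back-to-back noncacheable loads is simple arithmetic. This sketch keeps the text's assumptions (address accepted in the cycle it is requested, data returned the following cycle); the function name and the `first_completion` reference cycle are hypothetical, since only the spacing between loads is given.

```python
NONCACHEABLE_LOAD_INTERVAL = 4  # best-case cycles between completions, from the text


def earliest_load_completions(n_loads: int, first_completion: int = 0) -> list[int]:
    """Best-case completion cycle of each back-to-back noncacheable load.

    `first_completion` is a hypothetical reference cycle for the first load;
    only the spacing between loads is modeled, not absolute latency.
    """
    return [first_completion + k * NONCACHEABLE_LOAD_INTERVAL for k in range(n_loads)]
```

For instance, `earliest_load_completions(3)` returns `[0, 4, 8]`: three loads span at least eight cycles from the first completion to the last.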

Similarly, when a store to a noncacheable storage region is followed by multiple stores to noncacheable regions, the stores can complete no faster than every other cycle. The DCU can accept up to three stores before additional DCU commands stall waiting for the prior stores to complete.
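A cycle-by-cycle sketch shows how the three-store limit interacts with the every-other-cycle completion rate. It rests on assumptions that are not in the text and are repeated in the code: the CPU offers one store per cycle, a store completes no sooner than two cycles after it is accepted, and a slot freed by a completion can accept a new store in that same cycle. The function name is hypothetical.

```python
from collections import deque

STORE_INTERVAL = 2        # fastest completion rate from the text: every other cycle
MAX_ACCEPTED_STORES = 3   # stores the DCU can hold before later commands stall


def noncacheable_store_timeline(n_stores: int) -> list[int]:
    """Return the cycle in which each back-to-back noncacheable store is accepted.

    Hypothetical assumptions: the CPU offers one store per cycle, a store
    completes no sooner than STORE_INTERVAL cycles after it is accepted,
    and a slot freed by a completion can accept a new store that same cycle.
    """
    accepted: list[int] = []
    outstanding: deque = deque()  # completion cycles of accepted stores, oldest first
    last_completion = 0
    cycle = 0
    while len(accepted) < n_stores:
        # Retire every store whose completion cycle has arrived.
        while outstanding and outstanding[0] <= cycle:
            outstanding.popleft()
        if len(outstanding) < MAX_ACCEPTED_STORES:
            accepted.append(cycle)
            # Completions are spaced at least STORE_INTERVAL cycles apart.
            last_completion = max(cycle, last_completion) + STORE_INTERVAL
            outstanding.append(last_completion)
        # Otherwise all three slots are full and the pipeline stalls this cycle.
        cycle += 1
    return accepted
```

Under these assumptions `noncacheable_store_timeline(6)` returns `[0, 1, 2, 3, 4, 6]`: the first five stores are accepted on consecutive cycles, after which all three slots stay full and acceptance settles to every other cycle, matching the completion rate.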

Table 3-3. Priority Changes With Different Data Cache Operations

| Instruction Requesting PLB | Priority | Next Instruction | Resulting Priority |
|---|---|---|---|
| Any load from external memory | 1 | N/A | N/A |
| Any store | 0 | Any other cache operation not being accepted by the DCU | 1 |
| dcbf | 0 | Any cache hit | 0 |
| dcbf/dcbst | 0 | Noncacheable load | 1 |
| dcbf/dcbst | 0 | Another command that requires a line flush | 1 |
| dcbt | 0 | Any cache hit | 0 |
| dcbi/dccci/dcbz | 0 | N/A | N/A |
